Keeping sync fast with automated performance regression detection

Sync is a hard distributed systems problem, and rewriting the heart of our sync engine on the desktop client was a monumental effort. We've previously discussed our efforts to heavily test durability at different layers of the system. Today, we are going to talk about how we ensured the performance of our new sync engine.

In particular, we describe a performance regression testing framework we call Apogee. Apogee helps us find unanticipated performance issues during development and safeguards against bugs that we would otherwise release to our users. As we developed our new sync engine, we used Apogee to compare the performance of new vs. old, ensuring that the Dropbox sync experience didn't suffer when we rolled Nucleus out to our users. When we specifically sought to improve sync performance, we used Apogee as pre-release validation that our improvements had the intended impact. In this post, we'll cover Apogee's system design and how we overcame the challenges we faced while building it. We'll end by discussing a few performance regressions it caught for us over the past two years.

Design

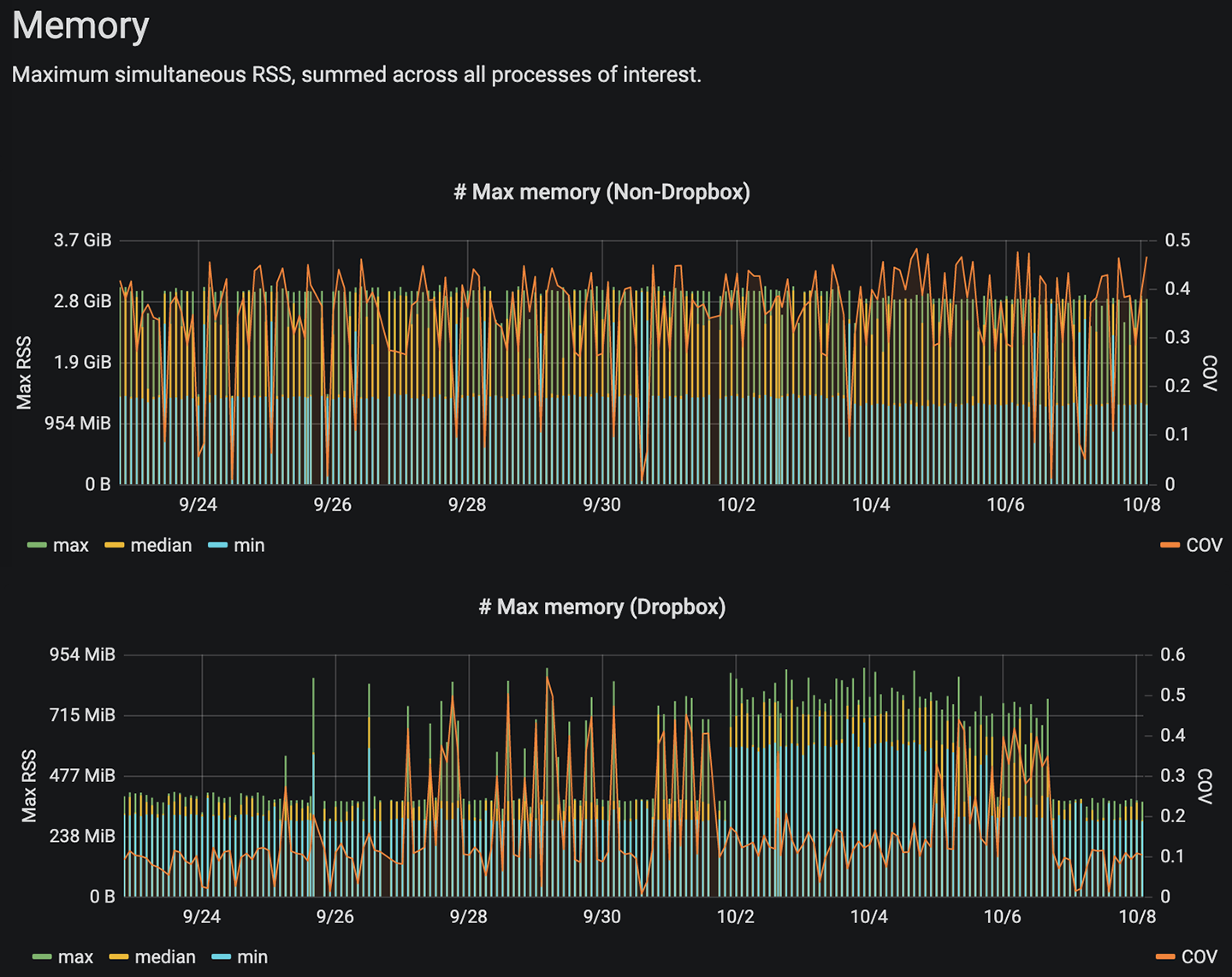

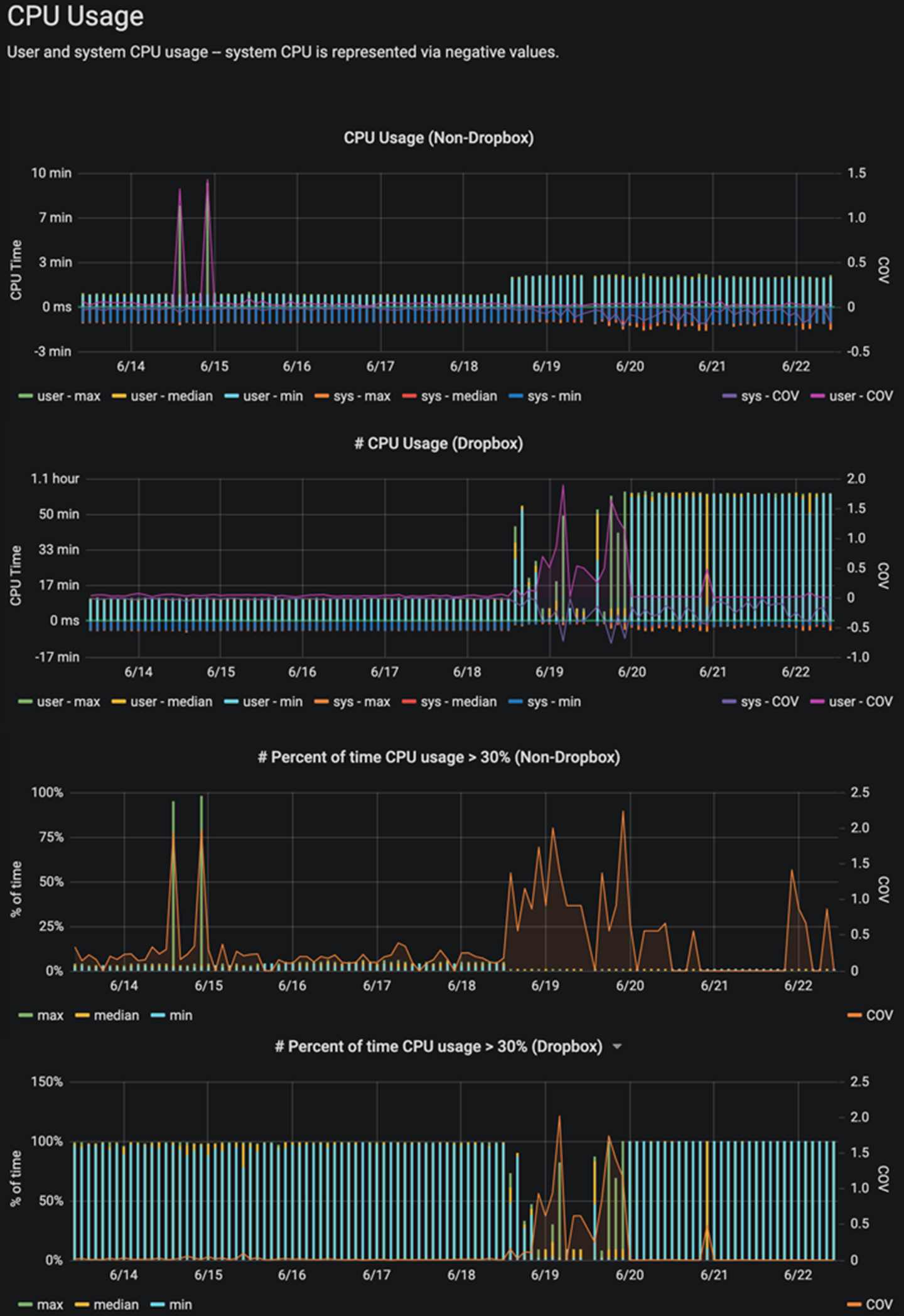

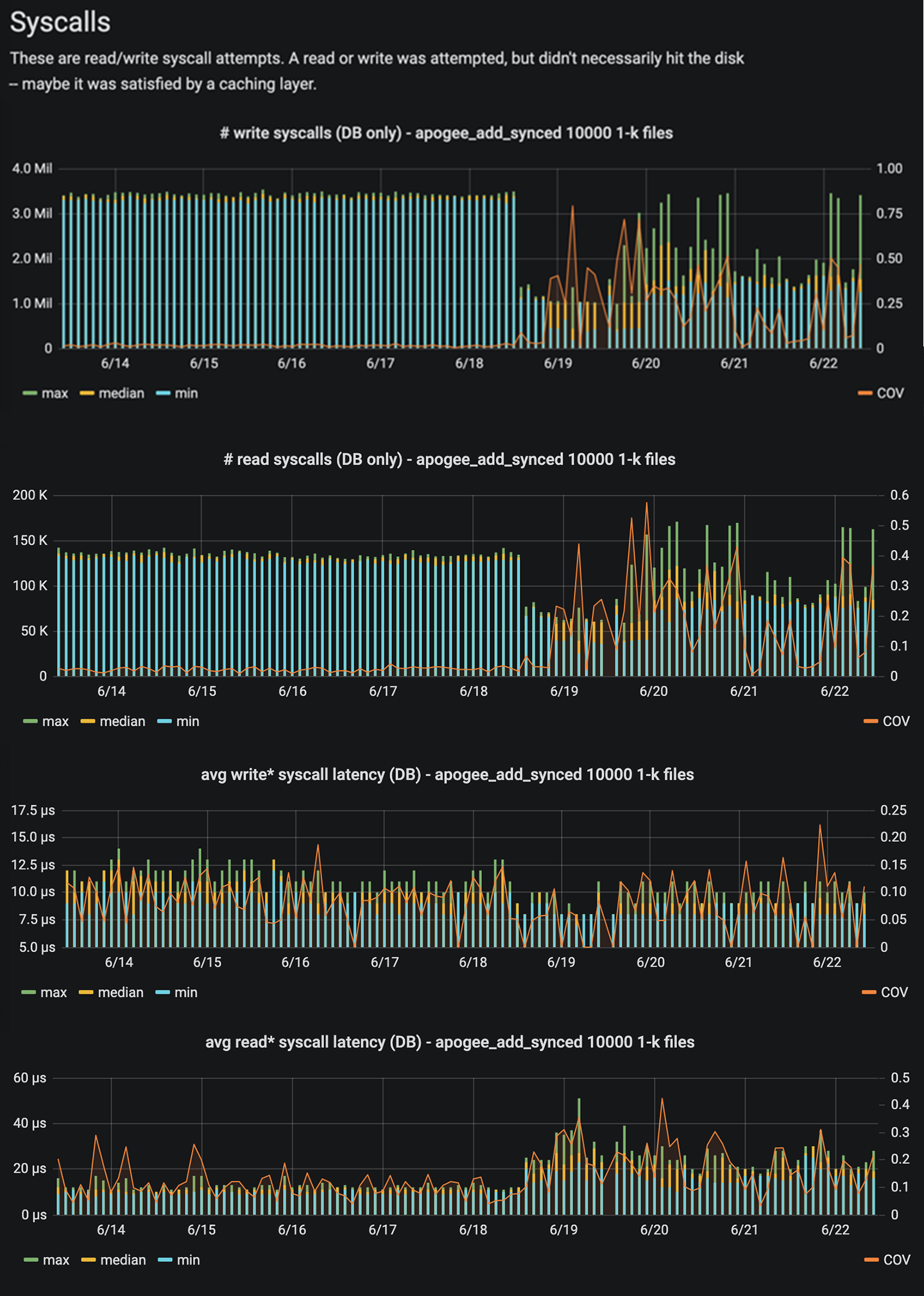

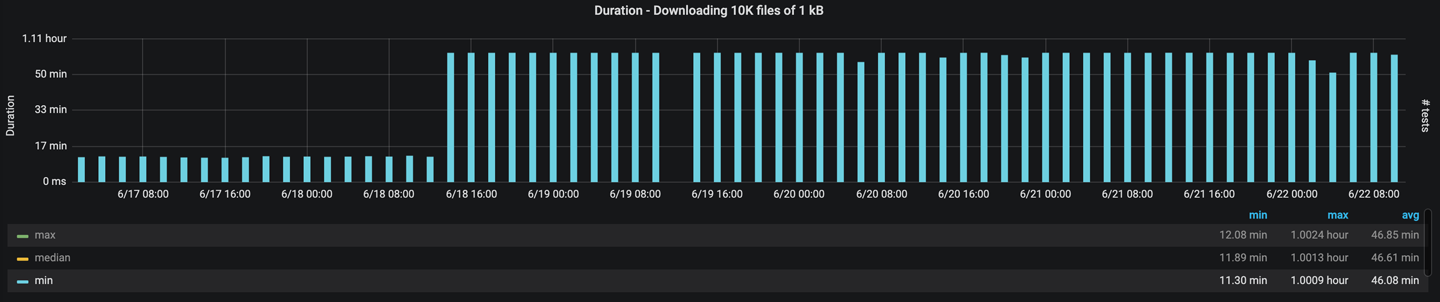

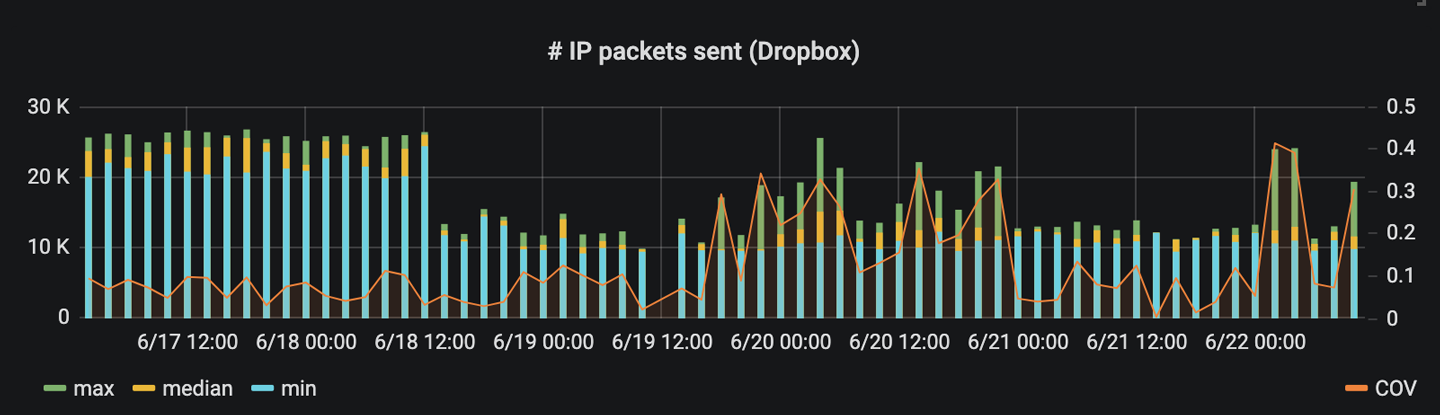

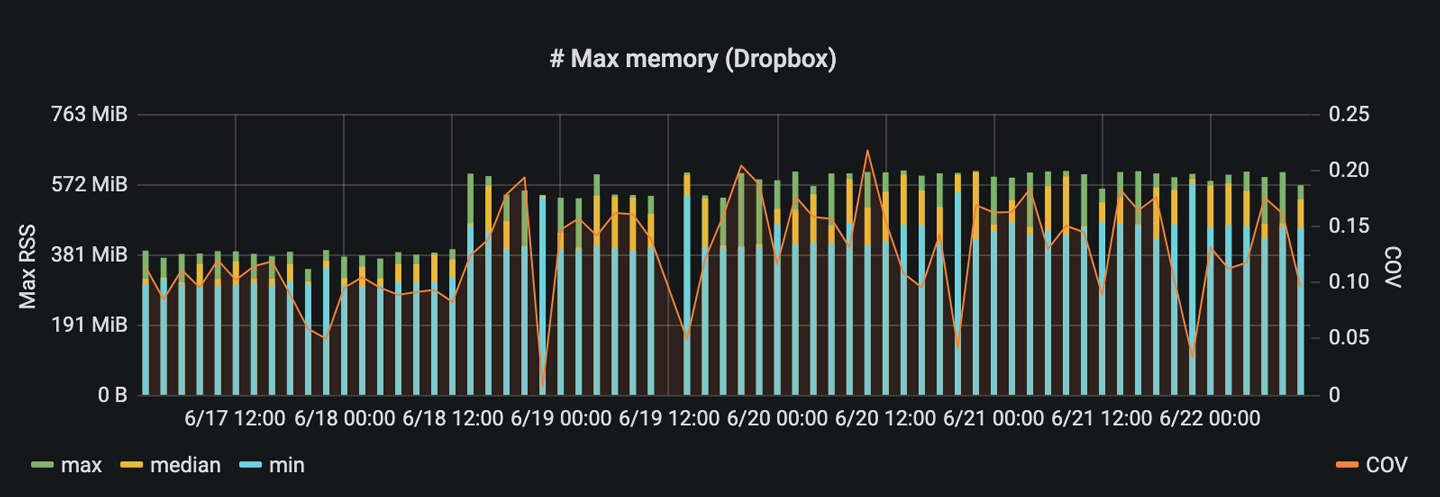

Apogee measures performance metrics (latency, CPU usage, disk i/o, memory usage, and network i/o) while running our existing end-to-end integration tests. In tests, this looks like a context manager that can be wrapped around a span of sync operations. The profile data can be annotated with key-value pairs that allow us to slice results based on the activity being profiled.
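The post doesn't show Apogee's internals, so the following is only a minimal sketch of how a context manager like this could be built. It records wall-clock latency and collects annotations. The real framework also gathers DTrace counters, which this sketch omits. The bodies of `apogee_profile` and `annotate` are illustrative, and `collected_profiles` and the profile dict layout are hypothetical.

```python
import time
from contextlib import contextmanager

# Hypothetical stand-ins: the post does not show how Apogee stores profiles.
collected_profiles = []  # finished profiles (e.g. later written as build artifacts)
_current = None          # the profile being recorded, if any


@contextmanager
def apogee_profile():
    """Record wall-clock latency for the wrapped block plus any annotations."""
    global _current
    profile = {"annotations": {}, "metrics": {}}
    _current = profile
    start = time.perf_counter()
    try:
        yield profile
    finally:
        # Runs even if the block raises, so partial runs are still recorded.
        profile["metrics"]["latency_s"] = time.perf_counter() - start
        _current = None
        collected_profiles.append(profile)


def annotate(key, value):
    """Attach a key-value pair used later to slice results (e.g. num_files)."""
    if _current is None:
        raise RuntimeError("annotate() must be called inside apogee_profile()")
    _current["annotations"][key] = value


# Usage: profile a stand-in for a span of sync operations.
with apogee_profile():
    annotate("num_files", 10000)
    sum(range(100_000))
```

Keeping the "current profile" in module state lets `annotate` be called anywhere inside the block without passing the profile around. It also means nested or concurrent profiles are not supported in this sketch.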

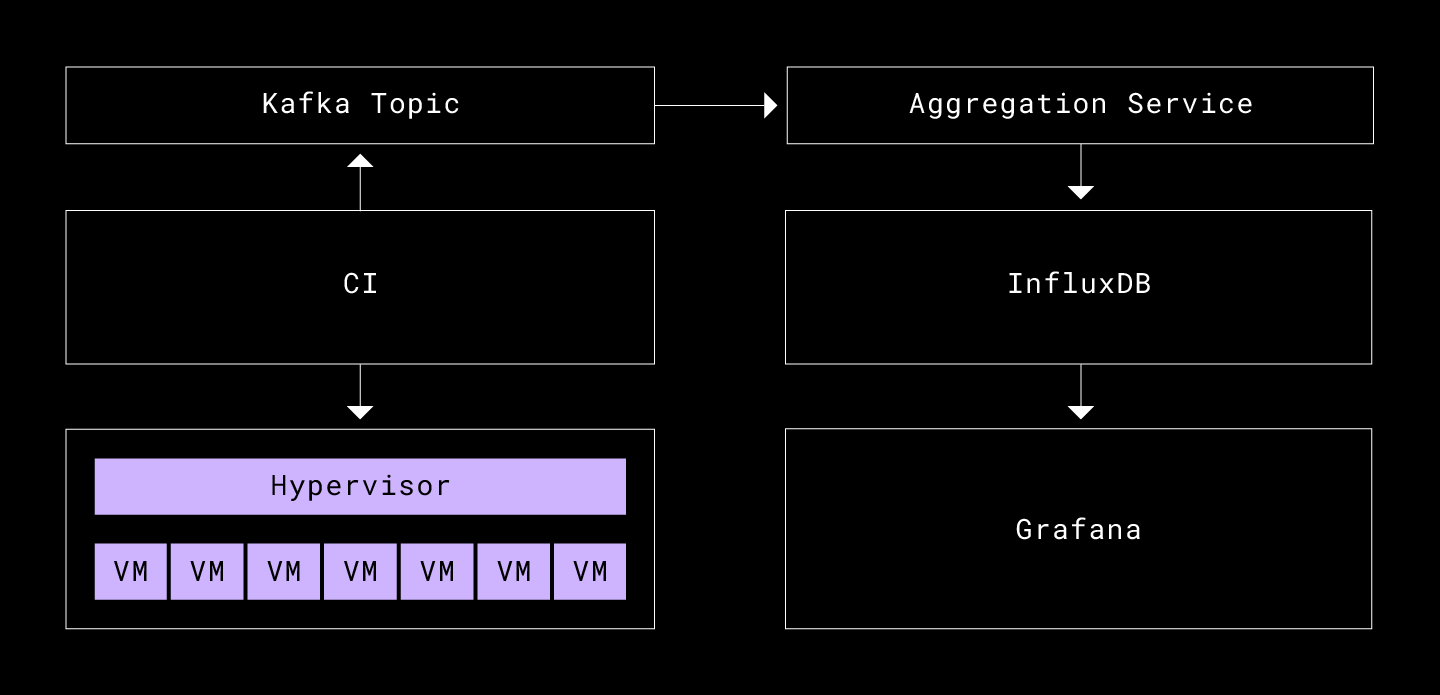

```python
def performance_test():
    # Internally, this uses a timer for latency calculation and DTrace for counting
    # the performance metrics such as disk i/o, network i/o, syscalls etc.
    with apogee_profile():
        do_something()

        # `annotate` allows for adding attributions to the profile report generated
        # by `apogee_profile`. For example:
        annotate("num_files", 10000)
        annotate("key", "value")
```

Our CI infrastructure automatically triggers these performance integration tests for every new commit, or periodically for some of the more expensive tests. Apogee aggregates and graphs the results. The data pipeline is as follows:

CI

The CI system at Dropbox allows for running custom test suites on all new commits. It publishes the results of the builds to a Kafka topic. We tail that topic to retrieve the build artifacts, which include our profile data and test logs. The CI runs the integration tests across a pool of virtual machines managed by a hypervisor. The hypervisor schedules these VMs with limited, shared critical resources such as memory, CPU, and network.

Aggregation service

This is a simple service, a Kafka consumer, that tails the relevant topic and aggregates the measurements from the build artifacts. It also adds custom fields not available at test time, such as a link to the build, the commit hash, the author, and a link to the logs. Once all the information about a build is aggregated, it writes the result to our time series database.
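A rough sketch of the enrichment step, leaving out the Kafka consumption itself. `aggregate_build`, the `build` metadata keys, and the assumed profile shape (`{"annotations": {...}, "metrics": {...}}`) are all hypothetical, as are the example.com URLs. The sketch turns each profile from one build into a point with tags, fields, and the build's timestamp.

```python
def aggregate_build(build, profiles):
    """Turn one build's profiles into time series points.

    `build` is a hypothetical dict decoded from the CI's Kafka message; each
    profile is assumed to look like {"annotations": {...}, "metrics": {...}}.
    """
    points = []
    for profile in profiles:
        fields = dict(profile["metrics"])  # measured values, e.g. latency_s
        # Fields that only exist after the build, not at test time.
        fields.update(
            commit_hash=build["commit_hash"],
            author=build["author"],
            build_url=build["build_url"],
            log_url=build["log_url"],
        )
        points.append(
            {
                # Annotations become tags so results can be sliced by them.
                "tags": {k: str(v) for k, v in profile["annotations"].items()},
                "fields": fields,
                # Every point from one build shares the build's timestamp.
                "timestamp_ns": build["timestamp_ns"],
            }
        )
    return points


build = {
    "commit_hash": "abc123",
    "author": "jdoe",
    "build_url": "https://ci.example.com/builds/1",
    "log_url": "https://ci.example.com/builds/1/logs",
    "timestamp_ns": 1_600_000_000_000_000_000,
}
profiles = [
    {"annotations": {"operation": "upload", "num_files": 1000}, "metrics": {"latency_s": 2.5}}
]
points = aggregate_build(build, profiles)
```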

InfluxDB and Grafana

We use InfluxDB for our time series data persistence, since we are familiar with it. Its interface is compatible with the shape of our metrics, and it has a well-tested plugin for Grafana, the data visualization tool we use at Dropbox. The InfluxDB protocol lets you write alphanumeric fields (e.g. latency, disk writes, commit hash) for a unique set of tag key-value pairs and a timestamp. The tags are the ones added in the tests with annotate() (e.g. {"operation": "upload", "num_files": "1000", "size": "1024"}). In our case, the timestamp is the timestamp of the build.

Reducing variability

The key challenge in developing Apogee was overcoming measurement variability. Before Apogee could reliably detect performance regressions between two different commits, we needed to show that it could get consistent results when run multiple times on the same code. After building out the system described above, it was obvious that our measurements were too noisy to detect even large performance regressions: the noise obscured any effects caused by code changes. If Apogee was going to be useful at all, we would need to invest heavily in driving down measurement variability when running repeatedly on the same code.

Challenges

Many factors in our problem space made driving down performance measurement variability challenging.

Test environment and platform

Dropbox runs across different platforms (MacOS, Windows, Linux), and so do our tests. Some of the areas of variability here were:

- Different tracing libraries and capabilities (e.g. DTrace wasn't available on Windows when we built this, but it now is :)

- Our CI system's Mac VMs ran on racked Mac Pros, but the Windows and Linux VMs ran on Dell blades

Real-world fidelity vs artificial repeatability

We constantly found ourselves navigating a fundamental tension between providing a useful simulation of real-user environments and reducing noise and variability.

Some of the challenges are as follows:

- Managing the explosion of variables

  - Network disk vs. spinning disk vs. flash disk vs. RAM disk

  - Different network speeds, noise, and packet corruption rates

  - Processor speed, cache size, memory, and interrupts/de-scheduling

- Using virtual machines vs. bare metal hardware

  - Companies commonly use dedicated performance labs with bare metal hardware

- Running against a production server vs. a dev server

Principles

Some of the principles we kept in mind when thinking about these challenges, and the tradeoffs that came with them, were:

- Don't aim to be a panacea

Focus on the problem that matters most, to avoid the complexity that comes with solving every problem the system could potentially solve

- Repeatability is key

Strive to keep the simulations as close to reality as possible, but prefer artificial repeatability over real-world fidelity if we have to pick one

- Make incremental progress

Given this multivariate space, first hold as many variables as possible constant, and only once we have confidence in those results, try varying more

Methodology

To point us in the right direction early on, we ran the same sync tests over and over while profiling the client. We then merged all of these profiles and identified the areas of code with the highest variability in wall time, network i/o, disk i/o, and memory usage. Duration was the first metric whose variability we set out to reduce. To understand where that variability was coming from, we took the coefficient of variation of the other metrics (network i/o, disk i/o, and memory usage) and used the t-test to correlate it with that of the duration. We tackled the highly correlated, high-variability areas in order from most to least variable. For each one, we read the profiles and code, developed hypotheses, and ran experiments to see the effect of various interventions on variability. Let's walk through some of the strategies that worked.
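The sketch below shows the kind of analysis described above on made-up per-run data. It computes each metric's coefficient of variation (standard deviation divided by mean) and ranks the metrics by how strongly they track duration across repeated runs. It swaps in a plain Pearson correlation over per-run values rather than reproducing the t-test analysis the post mentions. The metric names and numbers are hypothetical.

```python
import statistics


def coefficient_of_variation(samples):
    """Sample standard deviation relative to the mean."""
    return statistics.stdev(samples) / statistics.mean(samples)


def pearson(xs, ys):
    """Pearson correlation coefficient; 0.0 if either series is constant."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy) if sx and sy else 0.0


def rank_by_correlation(durations, metrics):
    """Return (name, cv, corr) tuples, most duration-correlated metric first."""
    rows = [
        (name, coefficient_of_variation(values), pearson(durations, values))
        for name, values in metrics.items()
    ]
    return sorted(rows, key=lambda row: abs(row[2]), reverse=True)


# Hypothetical measurements from five repeated runs of the same test on the same code.
durations = [10.0, 12.0, 11.0, 15.0, 10.5]
metrics = {
    "memory_mb": [500, 500, 501, 499, 500],
    "disk_io_ops": [100, 120, 110, 150, 105],
}
for name, cv, corr in rank_by_correlation(durations, metrics):
    print(f"{name}: cv={cv:.3f} corr={corr:+.2f}")
```

In this toy data, disk i/o moves in lockstep with duration, so it ranks first and is where you would start reading profiles.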

Application layer variance

Static sharding of tests

To run hundreds of thousands of tests efficiently, our CI generally uses dynamic sharding to distribute the load across the machines the tests run on. Pinning a subset of tests together for each run helped reduce variability across runs.
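The post doesn't say how tests were pinned. One simple way to get a fixed grouping is to derive each test's shard from a stable hash of its name, so the same tests always run together. The sketch below assumes that approach. `shard_for`, `static_shards`, and the test names are hypothetical.

```python
import hashlib


def shard_for(test_name, num_shards):
    """Map a test to a shard index that is identical on every run."""
    # Built-in hash() of str is salted per process, so use a stable digest instead.
    digest = hashlib.sha256(test_name.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def static_shards(test_names, num_shards):
    """Group tests into fixed shards; the same inputs always give the same grouping."""
    shards = [[] for _ in range(num_shards)]
    for name in sorted(test_names):
        shards[shard_for(name, num_shards)].append(name)
    return shards


tests = ["test_upload_1k_files", "test_download_large_file", "test_rename_dir", "test_delete_many"]
print(static_shards(tests, 2))
```

Hashing avoids maintaining a manifest, but shards can end up unevenly sized. A checked-in list of shard assignments is the obvious alternative when balance matters more.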

Dropbox headless mode

Our main focus was on measuring sync performance, namely the work that happens between adding or editing files and when they're fully synced. Our early measurements quickly showed that the desktop client user interface was a large source of performance variability. Things like rendering notifications and waiting for web views to load took a surprisingly unpredictable amount of time. It is also important to note that the system we were trying to test, the sync engine, did not depend on any of these components. Turning off the Dropbox desktop UI entirely and running in headless mode was one of the biggest variability wins we got.

Non-determinism

While the sync engine itself now executes deterministically, there were still certain areas of non-determinism, mainly server-side rate limiting, backoffs, and timeouts. Running the tests against the stage variant of our server reduced the chance of hitting these non-deterministic cases, since it was usually under less load. We also evaluated running the tests against another VM that ran all the essential backend services, but that meant losing some coverage of our network path. The variance from the real server's non-determinism was low enough that we decided to go ahead with it.

Infrastructural variance

Homogeneous VMs

Originally the hypervisor was tuned to share resources between child VMs to optimize for overall throughput. This meant it could allocate resources to VMs unevenly and de-schedule them as necessary. To make the control flow deterministic, we adjusted a few hypervisor knobs:

- Processor affinity and memory reservation: Gave each VM its own dedicated resources so there is less contention in the pool. This helps with CPU cache performance and better simulates running on bare metal.

- High latency sensitivity: Adjusted to optimize scheduling delay to better serve the low-latency needs of the application.

- Disable clock sync: Prevented the VM from spending time synchronizing clocks with the host. If the VM spends time de-scheduled, that time would not be counted towards test timing.

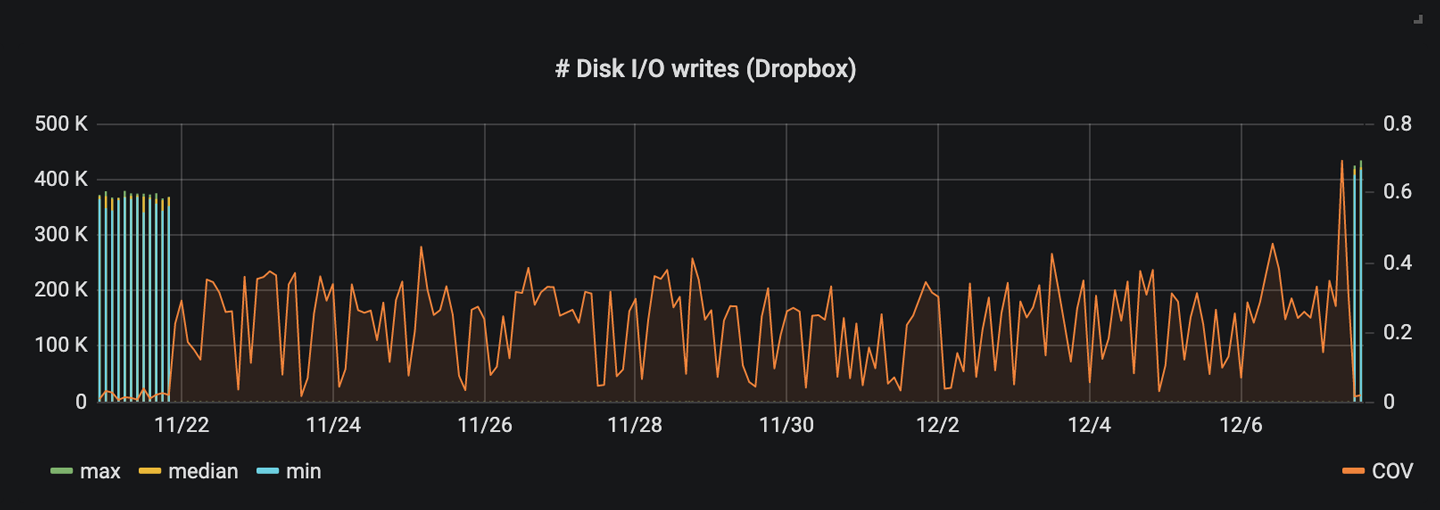

RAM disks

Our CI system's infrastructure used remote racks of flash-based drives to host client VMs, so all disk writes were actually sent out over the network. As a result, disk i/o was potentially extremely variable. Our server storage infrastructure allowed us to bound the network resources used by any one VM so they couldn't interfere with each other, but this didn't give us the reduction we wanted.

This was already an unusual i/o situation: most of our users don't host their Dropbox instances on VM-tuned network-attached storage. So we decided to swap it out for another storage solution that was also artificial but more predictable. We moved to RAM disks. We mounted a portion of physical memory as if it were a hard drive and prevented that memory segment from being paged out. This kept the sync engine's disk reads and writes on the VM host, with no more round trips across the data center.

This turned out to be a huge variability win and also made Dropbox sync faster. That was concerning, because we'd now made ourselves blind to an important class of potential performance problems. For example, fsyncs were now nearly instantaneous. Quadrupling their number wouldn't affect our measurements, even though it would almost certainly affect the customer experience. We'd be similarly blind to pathological access patterns that would tank performance on spinning disks or make poor use of the disk cache. However, we decided this tradeoff was worth making. We were also saved by tracking disk i/o and sync latency separately. Depending on the problem, we could detect a regression in disk performance even if sync didn't take longer.

Terminating background processes

Once we ran out of big sources of variability within Dropbox itself, we turned our attention to the broader environment in which it was running. In particular, we looked at other processes competing with Dropbox for resources. We instrumented all processes running in the VM during our tests. Dropbox scheduler preemptions and disk cache misses turned out to be highly correlated with a small subset of other processes running simultaneously. We got a modest variability win by terminating these troublesome processes before starting our tests.

For instance, we noticed a common pattern on MacOS where Spotlight would race with Dropbox to index files newly added by our test logic. When the two were out of phase, disk cache performance suffered badly, which showed up as a far higher rate of disk i/o. This was certainly relevant to improving Dropbox sync performance on Mac. However, Apogee's primary focus is catching regressions in Dropbox code itself, so reducing variability won out, and Spotlight was banned from our test environment.

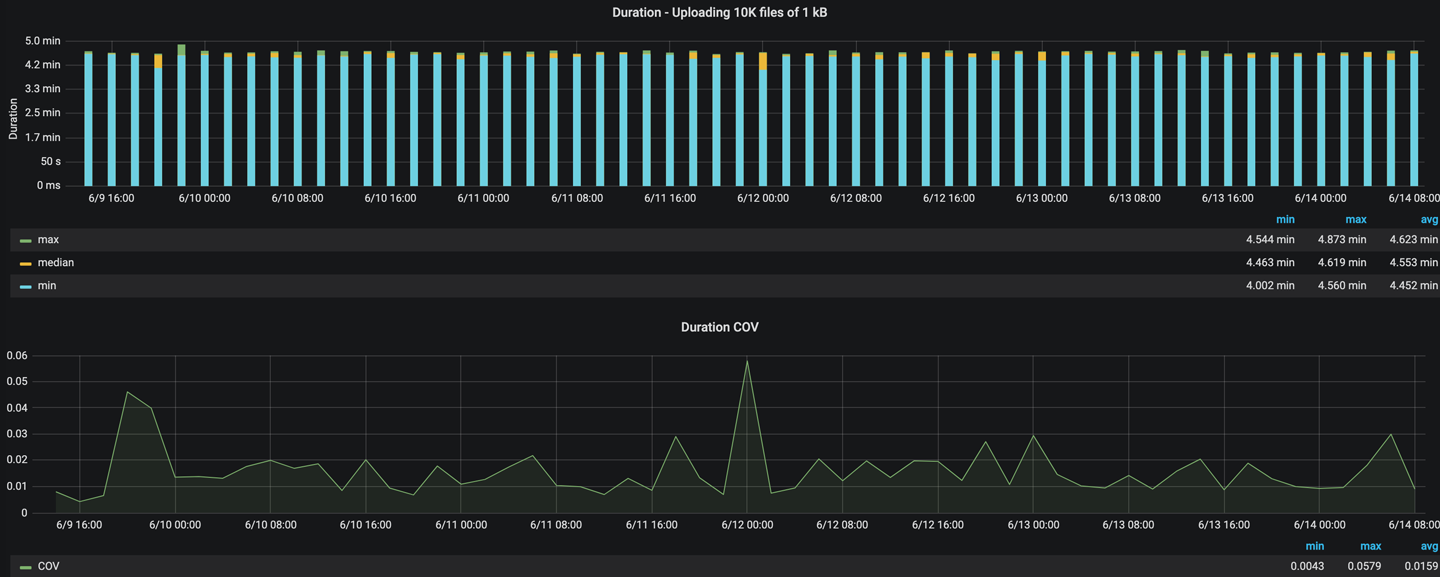

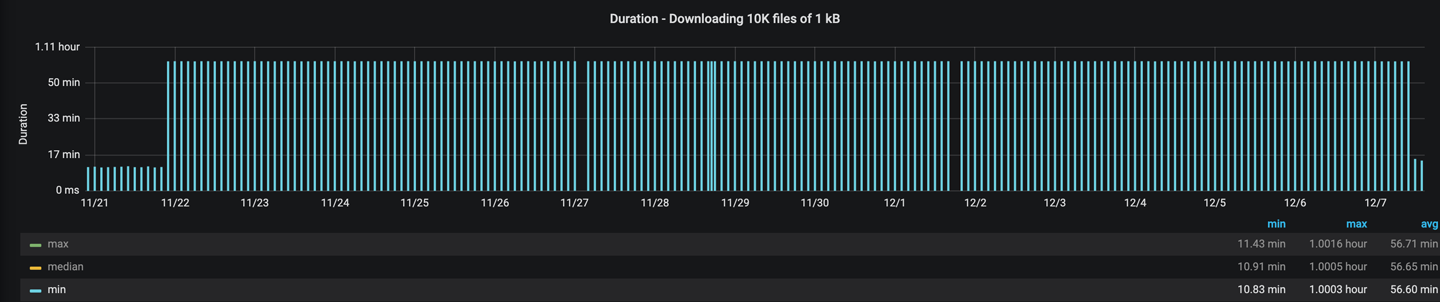

Results

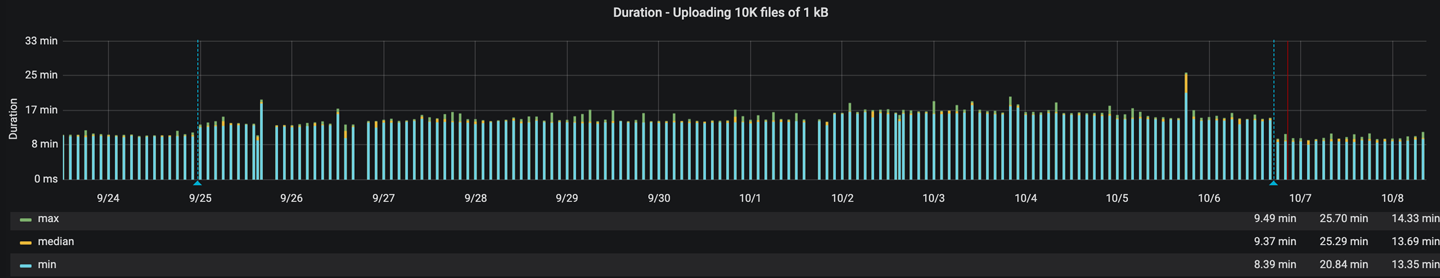

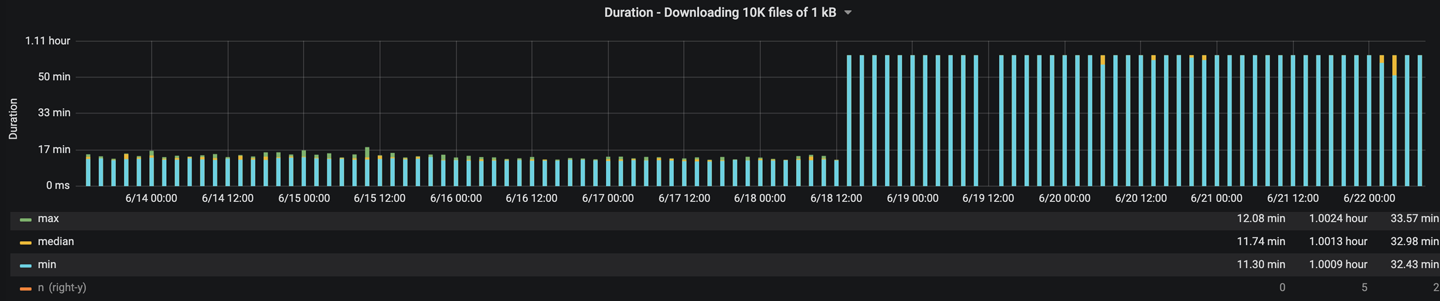

With all the work above, we brought variability down from 60% in the worst case to less than 5% for all of our tests. This means we can confidently detect regressions of 5% or more. To gain more confidence when alerting on regressions, we also reject one outlier per five runs of a test. We used this scheme only for alerting: we still graphed the outliers, because they often point to systemic regressions and faults in our infrastructure. We also built a comprehensive dashboard that developers could use to diagnose what went wrong in test runs.
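The post doesn't say how the outlier is chosen. The sketch below assumes it is the run farthest from the median of each group of five. It then flags a regression when the trimmed mean of a higher-is-worse metric, such as latency, is at least 5% above the baseline's. `reject_outliers`, `is_regression`, and the sample numbers are hypothetical.

```python
import statistics


def reject_outliers(samples, group_size=5):
    """Drop the sample farthest from the median in each full group of `group_size`."""
    kept = []
    for start in range(0, len(samples), group_size):
        group = list(samples[start:start + group_size])
        if len(group) == group_size:
            median = statistics.median(group)
            group.remove(max(group, key=lambda s: abs(s - median)))
        kept.extend(group)
    return kept


def is_regression(baseline, candidate, threshold=0.05):
    """True if the candidate's trimmed mean is at least `threshold` worse than baseline."""
    base = statistics.mean(reject_outliers(baseline))
    cand = statistics.mean(reject_outliers(candidate))
    return (cand - base) / base >= threshold


baseline = [10.0, 10.1, 9.9, 10.0, 14.0]  # one noisy run (14.0)
candidate = [10.7, 10.6, 10.8, 10.7, 10.7]
print(is_regression(baseline, candidate))  # True
```

Without the rejection step, the single noisy 14.0 run would pull the baseline mean up to 10.8 and hide the roughly 7% slowdown in the candidate.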

Caught regressions

Now let's walk through some of our favorite regressions that Apogee caught for us.

- A slowdown caused by a third-party hash map implementation that internally used a weak hashing scheme. Correlated entries were bucketed together, which raised insertion and lookup cost from constant time to linear time.

- Unbounded concurrency, which led to a ~50% regression in sync duration along with excessive memory and CPU usage.

- Iterating over a set (seeded with an RNG) and removing each element from a map (using the same seed) led to inefficient removal. This triggered collisions when the map was resized, ultimately yielding high-latency lookups. Possible fixes included resizing more efficiently or using a better map implementation such as BTreeMap; we ended up using the latter.

- Incompatible window sizes and frame sizes for high-bandwidth, high-latency connections, introduced when we first switched to a gRPC-powered network stack, led to request cancellations under high load.
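The post doesn't name the third-party library or its hash function, so the following is only a toy model of the first regression above. It uses a chained hash table with 64 buckets. `weak_hash` is a hypothetical weak scheme that uses the integer key as its own hash. `mixing_hash` is the splitmix64 finalizer, swapped in as an example of a hash that mixes high and low bits. With chaining, a lookup scans its bucket, so all keys landing in one bucket turns lookups from constant time into linear time.

```python
from collections import Counter

MASK64 = (1 << 64) - 1


def weak_hash(key):
    """Hypothetical weak scheme: the integer key is used as its own hash."""
    return key


def mixing_hash(key):
    """splitmix64 finalizer: mixes high and low bits so strided keys spread out."""
    z = (key + 0x9E3779B97F4A7C15) & MASK64
    z = ((z ^ (z >> 30)) * 0xBF58476D1CE4E5B9) & MASK64
    z = ((z ^ (z >> 27)) * 0x94D049BB133111EB) & MASK64
    return z ^ (z >> 31)


def largest_bucket(hash_fn, keys, num_buckets=64):
    """Size of the fullest bucket in a chained table with `num_buckets` buckets."""
    counts = Counter(hash_fn(k) % num_buckets for k in keys)
    return max(counts.values())


# Correlated keys: every key is a multiple of the bucket count.
keys = [i * 64 for i in range(1000)]
print(largest_bucket(weak_hash, keys))    # all 1000 keys share one bucket
print(largest_bucket(mixing_hash, keys))  # much smaller: keys spread across buckets
```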

Conclusion

Before Apogee, we only got visibility into performance problems through CX complaints from users hitting pathological cases. Reproducing these is often hard, because you might not have the evidence you need to trigger exactly what the end user is facing, especially with performance issues. Even if you do find the cause, fixing a regression weeks (or sometimes months) after the offending code was pushed is time-consuming, since you need to rebuild all the context. Multiple commits may have built on top of the original issue, making it hard to simply revert the code. If the bug is in the data model or persistence layer, you might be forced to run an expensive migration to fix it. Apogee sidesteps this unpleasant flow by warning us of performance regressions before they affect a single user. It goes a step further by providing clear evidence in the form of logs and other supplementary metrics. Engineers can run the performance test locally on their development machine to reproduce the issue, inspect the state of the sync engine in real time, and follow up with targeted benchmarks to zero in on the root cause.

Apogee has also been crucial in finding faults in Dropbox code that only show up at scale. It has helped us catch long-standing bugs and identify regressions introduced in various areas of the sync engine. The examples above include spotting inefficient ways of storing data, finding limitations in our asynchronous and concurrent code, catching bugs in third-party libraries, and helping us roll out a brand new network stack. Beyond finding regressions and confirming fixes, the system also continuously validates new performance improvements we make and then holds us accountable to that newly set bar.

Future work

Over the course of the sync engine rewrite, we made tremendous progress in testing, and we now have several stable, proven frameworks for testing sync. While Apogee tests sync at a very high level, we are now adding performance testing at the lower testing layers. These tests are cheaper to write, run, and manage. They also let us be more sophisticated in our testing, because we have much more visibility into what the sync engine is doing.

Acknowledgements

We want to acknowledge all the previous and current members of the sync team and the alumni of the sync performance team, specifically Ben Newhouse and Marc Sherry. We also want to thank the members of the partner teams who supported us in building this system.

Source: https://dropbox.tech/infrastructure/keeping-sync-fast-with-automated-performance-regression-detectio
